How to Actually Monitor Competitor Marketing (Without Drowning in Noise)

Most PMMs build competitor monitoring systems that generate alerts nobody reads. Here's how to build a system that surfaces competitive intel you'll actually use.

The PMM showed me her competitor monitoring setup with pride. RSS feeds from 15 competitor blogs. Google Alerts for 8 competitor names. Weekly email digests. A Slack channel that posted every competitor press release, blog post, and product update.

"I never miss anything," she said.

I asked: "When's the last time you acted on something from this system?"

She paused. "Define acted on."

That's the problem with most competitor monitoring systems. They catch everything and surface nothing useful. They generate noise, not intelligence.

I've built monitoring systems that sent me 50 alerts per week that I never read. I've watched PMMs spend hours reviewing competitor content that had zero impact on their strategy or tactics.

Then I learned the difference between monitoring systems that generate activity and systems that generate action. The difference isn't more sources or better tools. It's ruthless focus on the signals that actually matter.

Here's how to build a competitor marketing monitoring system that surfaces intelligence you'll use, not notifications you'll ignore.

Stop Monitoring Everything, Start Monitoring Signals That Change Behavior

The SaaS PMM had set up alerts for everything her top three competitors published: blog posts, case studies, whitepapers, webinars, podcast appearances, social media posts, press releases.

Average alerts per week: 47.

Average alerts she actually read: 3.

Average alerts that changed how she did her job: 0.4.

She was monitoring activity, not signals. Activity is what competitors publish. Signals are changes that require your response.

I sat with her and asked: "What competitor changes actually require you to do something different?"

Her list:

- New product features that change competitive positioning

- Pricing changes that affect deal dynamics

- New customer segments they're targeting

- Messaging shifts that reveal strategy changes

- Partnership announcements that expand their capabilities

Five signal categories. Everything else was noise.

We rebuilt her monitoring system around those five signals only. Alerts dropped from 47 per week to 4 per week. Action rate went from 0.4 to 2.8 per week.

Not because she monitored less. Because she monitored what mattered.

Here's the uncomfortable truth about competitor monitoring: comprehensive coverage correlates inversely with utility. The more you monitor, the less you act on.

Research from competitive intelligence teams shows this pattern: teams monitoring 10+ competitor signals average an 8% action rate on alerts. Teams monitoring 3-5 signals average a 41% action rate.

Stop trying to catch everything. Start identifying the 3-5 signals that require your response. Ignore everything else.

Your five competitor signals (customize for your market):

- Product capability changes - New features that shift competitive positioning

- Pricing/packaging updates - Changes that affect deal economics

- Target market shifts - New segments or use cases they're pursuing

- Strategic partnerships - Integrations or alliances that expand capabilities

- Executive messaging changes - New narrative in earnings calls, conference talks, or investor updates

Monitor these ruthlessly. Ignore blog posts, social posts, customer testimonials, and minor PR announcements unless they reveal one of these five signals.
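A minimal sketch of that filter, assuming your alerts arrive as titles you can scan. The `Alert` type, the category keys, and the keyword lists are all hypothetical placeholders, and substring matching is crude, so treat this as a starting point rather than a finished classifier.

```python
from dataclasses import dataclass

# The five signal categories from the list above. The keywords are
# illustrative guesses, not a vetted taxonomy; tune them for your market.
SIGNAL_KEYWORDS = {
    "product": ["launch", "new feature", "general availability"],
    "pricing": ["pricing", "price", "tier", "packaging"],
    "target_market": ["now serving", "expands into", "for enterprise"],
    "partnership": ["partnership", "integration", "alliance"],
    "executive_messaging": ["earnings call", "keynote", "investor"],
}


@dataclass
class Alert:
    competitor: str
    title: str


def classify(alert: Alert) -> list[str]:
    """Return the signal categories whose keywords appear in the title."""
    title = alert.title.lower()
    return [
        category
        for category, keywords in SIGNAL_KEYWORDS.items()
        if any(keyword in title for keyword in keywords)
    ]


def keep_signals(alerts: list[Alert]) -> list[tuple[Alert, list[str]]]:
    """Drop alerts that match no category; keep the rest with their labels."""
    labeled = [(alert, classify(alert)) for alert in alerts]
    return [(alert, categories) for alert, categories in labeled if categories]


# Made-up sample alerts for illustration only.
alerts = [
    Alert("Competitor X", "Competitor X announces new pricing tiers"),
    Alert("Competitor X", "5 tips for better dashboards"),
    Alert("Competitor Y", "Competitor Y launches AI analytics"),
]
for alert, categories in keep_signals(alerts):
    print(alert.competitor, categories)
```

The blog post about dashboard tips matches nothing and is dropped. That is the point: anything that cannot be tied to one of the five signals never reaches your review.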

Build a Weekly Review System, Not a Daily Alert System

The fintech PMM had real-time alerts set up for competitor mentions. Every press release triggered a Slack notification. Every blog post generated an email. Every social media post appeared in a feed.

She spent 30 minutes per day reviewing alerts. Most days, she took no action. The system created busy work that felt like competitive intelligence but delivered minimal value.

I asked: "What percentage of competitor changes require same-day response?"

She calculated: less than 2%.

A competitor launches a new feature. You don't need to know immediately—you need to know by the time your next sales call happens. A competitor changes messaging. You don't respond same-day—you incorporate it into your next battlecard update.

Same-day alerts create urgency without requiring urgency. They interrupt deep work for information that doesn't require immediate action.

We switched her system from real-time alerts to a weekly review process. Every Friday at 2pm, she reviewed the week's competitor changes in one focused session.

Time invested dropped from 150 minutes per week (30 min × 5 days) to 45 minutes per week (one focused session). Action rate increased because she reviewed everything with context instead of reacting to individual alerts.

The pattern: daily alerts optimize for responsiveness. Weekly reviews optimize for informed decisions.

Unless you're in a market where competitors launch daily and every launch requires immediate response (rare), weekly reviews beat real-time alerts.

How to build a weekly review system:

Pick one day and time. Friday 2pm works well—end of week, before weekend, gives you context from the full week.

Consolidate sources. Pull everything into one location. Don't review 8 different tools—export to a single document or dashboard.

Process in batches. Review all product changes together, all messaging changes together, all partnership announcements together. Patterns emerge faster than reviewing chronologically.

Ask one question per signal: "Does this require me to do something different?" If no, archive it. If yes, create a task immediately.

Set a time limit. 45-60 minutes max. If you can't process the week's signals in an hour, you're monitoring too much noise.
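The batching and the one-question triage can be sketched in a few lines. This assumes each item of the week is a small record with a category and a yes/no answer you fill in yourself during the review; the field names and sample items are hypothetical.

```python
from collections import defaultdict


def batch_by_category(items):
    """Group the week's items so each signal category is reviewed together."""
    batches = defaultdict(list)
    for item in items:
        batches[item["category"]].append(item)
    return dict(batches)


def triage(items):
    """Split items by the review question: does this require a change?"""
    tasks = [item for item in items if item["requires_change"]]
    archived = [item for item in items if not item["requires_change"]]
    return tasks, archived


# Made-up items; "requires_change" is the reviewer's answer, not computed.
week = [
    {"category": "pricing", "summary": "Enterprise tier cut to $35K", "requires_change": True},
    {"category": "product", "summary": "Minor UI refresh", "requires_change": False},
    {"category": "pricing", "summary": "New growth tier at $15K", "requires_change": True},
]
for category, batch in batch_by_category(week).items():
    tasks, archived = triage(batch)
    print(category, "-> tasks:", len(tasks), "archived:", len(archived))
```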

I've used this system for three years. Average review time: 38 minutes per week. Average actions taken: 2.3 per week. Action completion rate: 87%.

Compare to the PMM spending 30 minutes daily reviewing real-time alerts. She invested more than three times as much time (150 minutes per week) with 65% lower action completion.

Track Messaging Changes, Not Content Publishing

The enterprise software PMM monitored every piece of competitor content. Blog posts, whitepapers, webinars, case studies. She read everything they published.

I asked: "What are you looking for?"

"I want to understand their strategy."

"Have you learned their strategy from their blog posts?"

She admitted: not really. Blog posts reveal content topics, not strategic direction.

Here's what actually reveals competitor strategy: messaging changes in high-stakes environments.

Earnings calls. What executives emphasize when talking to investors shows real priorities.

Conference keynotes. What they lead with in front of potential buyers reveals positioning.

Sales materials. What messages appear in pitch decks and demo scripts shows what's working in deals.

Pricing pages. How they describe value and structure packages reveals monetization strategy.

Job postings. What roles they're hiring for signals strategic bets.

Content marketing reveals content topics. High-stakes messaging reveals strategy.

The PMM shifted from monitoring content to monitoring messaging. Instead of reading 20 blog posts per month, she analyzed:

- Quarterly earnings call transcripts (4 per year)

- Conference keynote recordings (2-3 per quarter)

- Pricing page changes (monitored monthly with screenshots)

- Executive LinkedIn posts (filtered for strategic announcements only)

- Strategic role job postings (VP+ level only)

Time invested dropped 60%. Strategic insight increased measurably—she caught three major strategy shifts in six months that didn't appear in any blog content.

How to monitor messaging, not content:

Track pricing page changes monthly. Screenshot competitor pricing pages in the first week of every month. Compare month-over-month. Changes in value propositions, package names, or feature emphasis reveal strategic shifts.

Read earnings transcripts, skip blog posts. Public companies reveal real priorities in investor communications. Set a calendar reminder for quarterly earnings dates.

Watch conference keynotes at 1.5x speed. Skip to the "what we're announcing" and "where we're going" sections. That's where new messaging debuts.

Monitor executive social media, not company social media. Company accounts publish marketing content. Executive accounts (CEO, CMO, VP Product) signal strategic direction.

Track job postings for strategic roles. Hiring a VP of Enterprise Sales signals enterprise focus. Hiring a Head of Partnerships signals ecosystem strategy. Hiring a Chief AI Officer signals product direction.
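If you save the pricing page as text as well as a screenshot, the month-over-month comparison can be done with a diff. Here is a rough sketch using Python's standard `difflib`; the `pricing_changes` helper and the sample snapshots are assumptions for illustration, and a real page would need its text extracted first.

```python
import difflib


def pricing_changes(last_month: str, this_month: str) -> list[str]:
    """Return only the lines removed ("- ") or added ("+ ") between snapshots."""
    delta = difflib.ndiff(last_month.splitlines(), this_month.splitlines())
    # ndiff also emits unchanged lines ("  ") and hint lines ("? "); skip those.
    return [line for line in delta if line.startswith(("- ", "+ "))]


# Hypothetical text snapshots of one competitor's pricing page.
october = "Enterprise: $50K annually"
november = "Growth: $15K annually\nEnterprise: $35K annually"

for line in pricing_changes(october, november):
    print(line)
```

Unchanged lines are filtered out, so a month with no pricing movement produces an empty list and nothing to review.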

You learn more about competitor strategy from one earnings call than from 100 blog posts.

Build a Competitive Timeline, Not a Content Archive

The cloud infrastructure PMM had organized competitor information by category: product features, pricing, messaging, customers, partnerships. Comprehensive taxonomy. Hard to use.

When sales asked "What's changed with Competitor X since we last competed with them?" she couldn't answer quickly. She had to search through multiple categories, compare dates, reconstruct the timeline manually.

The problem: categorical organization optimizes for storage, not retrieval. You need temporal organization that shows change over time.

I helped her rebuild the system as a competitive timeline. One document per competitor. Chronological entries. Each entry: date, change description, source link, "so what" implication.

Example entry format:

Nov 15, 2025 - Product Launch
Change: Competitor X launched AI-powered analytics feature. Positions as "ChatGPT for your data."
Source: [product announcement]
Implication: Now competing on AI narrative. Update battlecard with our ML approach vs. their ChatGPT integration positioning.

Oct 3, 2025 - Pricing Change
Change: Reduced enterprise tier from $50K to $35K annually. Added new "growth" tier at $15K.
Source: [pricing page archive]
Implication: Aggressive downmarket expansion. Sales seeing more competition in $10K-$30K deal range.

Timeline view makes patterns visible. You see the sequence: pricing change in October, product launch in November, new market messaging in December. The pattern reveals strategy.

Categorical organization hides patterns across time. Timeline organization reveals strategic evolution.

How to build a competitive timeline:

One document per top competitor. Don't try to timeline 10 competitors. Focus on your top 3-5.

Reverse chronological order. Most recent at top. You care more about what happened this month than what happened two years ago.

Four fields per entry: Date, Change, Source, Implication. Keep it consistent.

Update weekly. Add entries during your weekly review session. Takes 5 minutes per competitor.

Archive annually. Start fresh doc each year. Archive previous year. Keeps current timeline focused on recent changes.

Share with sales. Timeline format makes it easy for sales to get caught up on competitor changes. "What's new with Competitor X?" = "Read the top 10 entries."
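A Google Doc is enough, but if you keep the timeline in a spreadsheet or script, the same four fields map onto a small record. This is a sketch under that assumption; `TimelineEntry`, `latest`, and `since` are hypothetical names, and the entries are shortened from the examples earlier in this section.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class TimelineEntry:
    when: date
    change: str
    source: str
    implication: str


def latest(entries, limit=10):
    """Most recent entries first, capped at `limit` (the 'top 10' view)."""
    return sorted(entries, key=lambda entry: entry.when, reverse=True)[:limit]


def since(entries, last_competed: date):
    """Entries newer than the last time sales faced this competitor."""
    return [entry for entry in latest(entries, len(entries)) if entry.when > last_competed]


timeline = [
    TimelineEntry(date(2025, 10, 3), "Enterprise tier cut from $50K to $35K",
                  "[pricing page archive]", "Aggressive downmarket expansion"),
    TimelineEntry(date(2025, 11, 15), "Launched AI-powered analytics feature",
                  "[product announcement]", "Now competing on AI narrative"),
]
for entry in since(timeline, date(2025, 10, 31)):
    print(entry.when.isoformat(), "-", entry.change)
```

`since` answers the sales question directly: pass the date of the last deal against that competitor and read back only what changed afterward.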

I've maintained competitive timelines for three years. When sales asks "What's changed with our competitors?" I can answer in 60 seconds by reading recent timeline entries.

Compare to the PMM with categorical organization who needed 20 minutes to reconstruct changes across multiple documents.

Focus on Three Competitors, Not Ten

The cybersecurity PMM monitored 12 competitors. She had alerts set up for all of them. Weekly review sessions ran 90+ minutes trying to process updates from a dozen companies.

I asked: "How many of these 12 competitors do you compete with regularly?"

She checked opportunity data. 78% of competitive deals involved the same three competitors. The other nine appeared occasionally but weren't driving daily competitive pressure.

She was spending equal time monitoring all 12 competitors. She should have been spending 80% of time on the top three.

We restructured her monitoring:

Tier 1 (3 competitors - about 80% of competitive deals): Weekly review, full timeline, detailed monitoring of all five signal categories.

Tier 2 (4 competitors - 15% of competitive deals): Monthly review, basic timeline, monitor major announcements only.

Tier 3 (5 competitors - 5% of competitive deals): Quarterly review, no timeline, monitor only if they appear in an active deal.

Her weekly review time dropped from 90 minutes to 35 minutes. She had deeper intelligence on the competitors that mattered and stopped wasting time on competitors that rarely appeared.

Research across competitive intelligence teams shows this pattern: effectiveness correlates with focus, not coverage. Teams monitoring 3-4 competitors deeply outperform teams monitoring 10+ competitors broadly.

The uncomfortable truth: comprehensive competitor monitoring is a waste of time. Monitoring focused on the competitors you're actually losing deals to is what works.

How to tier your competitors:

Pull last 12 months of opportunity data. Filter for "closed-lost" and "closed-lost-to-competitor."

Count frequency. How many times did each competitor appear?

Calculate percentage. What % of total competitive losses?

Tier based on frequency:

- Tier 1: Top 3 competitors, >70% of competitive losses

- Tier 2: Next 3-4 competitors, 20-25% of competitive losses

- Tier 3: Everyone else, <10% of competitive losses

Allocate monitoring time proportionally: 80% of time on Tier 1, 15% on Tier 2, 5% on Tier 3.
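The counting and tiering steps are simple enough to script against a CRM export. Below is a sketch that assumes you can produce a flat list with one competitor name per closed-lost deal; `tier_competitors`, the tier sizes, and the sample data are assumptions, and it tiers purely by rank, so check the resulting percentages against the thresholds above.

```python
from collections import Counter


def tier_competitors(lost_to, tier1_size=3, tier2_size=4):
    """Rank competitors by loss count and assign a tier by rank.

    Returns {competitor: (tier, percent_of_competitive_losses)}.
    """
    counts = Counter(lost_to)
    total = sum(counts.values())
    tiers = {}
    for rank, (name, losses) in enumerate(counts.most_common()):
        if rank < tier1_size:
            tier = 1
        elif rank < tier1_size + tier2_size:
            tier = 2
        else:
            tier = 3
        tiers[name] = (tier, round(100 * losses / total, 1))
    return tiers


# Made-up export: one entry per deal lost to a named competitor.
lost_to = ["A"] * 8 + ["B"] * 6 + ["C"] * 4 + ["D"] * 2
for name, (tier, share) in tier_competitors(lost_to).items():
    print(f"{name}: Tier {tier}, {share}% of competitive losses")
```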

Most PMMs monitor all competitors equally. Smart PMMs monitor the competitors they're actually losing to.

Measure What You Do With Intel, Not How Much Intel You Collect

The marketing ops PMM had impressive metrics for her competitor monitoring system. Sources monitored: 47. Weekly alerts processed: 63. Competitive updates distributed to sales: 12 per month.

I asked: "How do you know if this is working?"

She pointed to the metrics. 47 sources. 63 alerts. 12 updates.

Those are activity metrics. They measure monitoring effort, not monitoring impact.

I asked different questions:

"How many times did competitive intelligence change a sales conversation outcome?"

"How many times did competitive intel inform a product decision?"

"How many times did you update positioning based on competitor changes?"

She didn't know. She measured input (alerts processed) not output (decisions influenced).

We shifted her metrics:

Old metric: 63 alerts processed per week
New metric: 2.3 actions taken per week based on competitive intel

Old metric: 12 competitive updates distributed monthly
New metric: 78% of sales reps report using competitive intel in deals (measured quarterly)

Old metric: 47 sources monitored
New metric: Average 48-hour time from competitor change to updated battlecard

Input metrics feel productive. Output metrics measure impact.

The brutal reality: you can process 1,000 alerts per month and deliver zero value if none of those alerts change what your company does.

How to measure competitive intelligence impact:

Track actions taken, not alerts processed. Every weekly review, log how many competitive signals led to a specific action (update battlecard, change messaging, inform product team, etc.)

Survey sales quarterly. "In the last 90 days, how many times did competitive intelligence help you in a deal?" Track response rate and frequency.

Measure time-to-update. When a competitor makes a major change, how long until your sales team has updated guidance? Track from announcement date to battlecard update date.

Connect to deal outcomes. When competitive intel influences a deal, note it. Track correlation between competitive program investment and win rate in competitive deals.

Calculate time ROI. Divide the minutes invested in monitoring by the number of actions taken. Goal: <20 minutes invested per action taken.
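Two of these measures are plain arithmetic, so here is a small sketch of them. The function names are hypothetical; the inputs reuse figures quoted elsewhere in this article (38 minutes and 2.3 actions per week, and a 48-hour battlecard turnaround).

```python
from datetime import date


def minutes_per_action(minutes_invested, actions_taken):
    """Time ROI: minutes of monitoring spent for each action it produced."""
    if actions_taken == 0:
        return float("inf")  # time spent with nothing to show for it
    return minutes_invested / actions_taken


def days_to_update(change_announced: date, battlecard_updated: date) -> int:
    """Time-to-update: days from the competitor's change to refreshed guidance."""
    return (battlecard_updated - change_announced).days


weekly_cost = minutes_per_action(38, 2.3)
print(f"{weekly_cost:.1f} minutes per action")  # compare against the 20-minute goal
print(days_to_update(date(2025, 11, 15), date(2025, 11, 17)), "days to update")
```

Log both numbers during each weekly review. A rising minutes-per-action figure is an early sign that noise is creeping back into the system.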

Most competitive intelligence programs can't prove impact because they measure activity instead of outcomes.

The teams that justify budget, headcount, and executive support are the ones that can show: "We invested X hours in competitive monitoring. It led to Y actions that influenced Z deals worth $W in revenue."

The Monitoring System That Actually Works

After building and breaking monitoring systems for six years, here's what I use now:

Monday 9am: Check pricing pages for top 3 competitors (5 minutes via web archive tool)

Friday 2pm: 45-minute weekly review session

- Review flagged items from monitoring tools (15 min)

- Update competitive timelines with new entries (15 min)

- Identify actions needed and create tasks (10 min)

- Review and close out previous week's actions (5 min)

Monthly (first Friday): 60-minute deep review

- Read latest earnings transcripts for public competitors (20 min)

- Review job posting changes for strategic signals (10 min)

- Update sales on material competitive changes (15 min)

- Review monitoring system effectiveness metrics (15 min)

Quarterly: Review competitor tiers based on deal data, adjust monitoring focus if patterns changed

Tools I use:

- Visualping for pricing page monitoring (automated screenshots)

- Feedly with focused RSS feeds (5 sources per competitor, max)

- Competitive timeline doc (Google Doc, one per top competitor)

- Simple spreadsheet for tracking: Date | Competitor | Change | Action Taken | Outcome

Total time invested: ~4 hours per month (3 hours weekly reviews + 1 hour monthly deep dive)

Actions taken per month: Average 9-11

Action completion rate: 83%

Sales satisfaction with competitive intel (quarterly survey): 4.2/5.0

I don't claim comprehensive coverage. I claim useful coverage. I miss competitor blog posts, social media updates, and minor announcements constantly.

But I catch the signals that matter. And I convert them into actions that help sales win deals.

That's what competitor monitoring should do. Not create activity. Create advantage.

What Most PMMs Won't Admit About Competitor Monitoring

The uncomfortable truth: most competitor monitoring systems are make-work projects that create the appearance of competitive intelligence without delivering competitive advantage.

PMMs build comprehensive monitoring systems because it feels like strategic work. They process dozens of alerts because it feels productive. They distribute weekly competitive updates because it demonstrates value.

But when you ask "Did this intel change a deal outcome?" most can't answer.

The PMMs who are honest admit: they spend hours monitoring competitors for information they never use, distributed to sales teams who never read it, supporting a program that looks impressive in quarterly reviews but doesn't move the business.

The solution isn't more monitoring. It's ruthless focus on the signals that drive action.

Monitor less. Act more. Measure outcomes, not activity.

That's the difference between competitor monitoring that generates alerts and competitor monitoring that generates wins.

Kris Carter

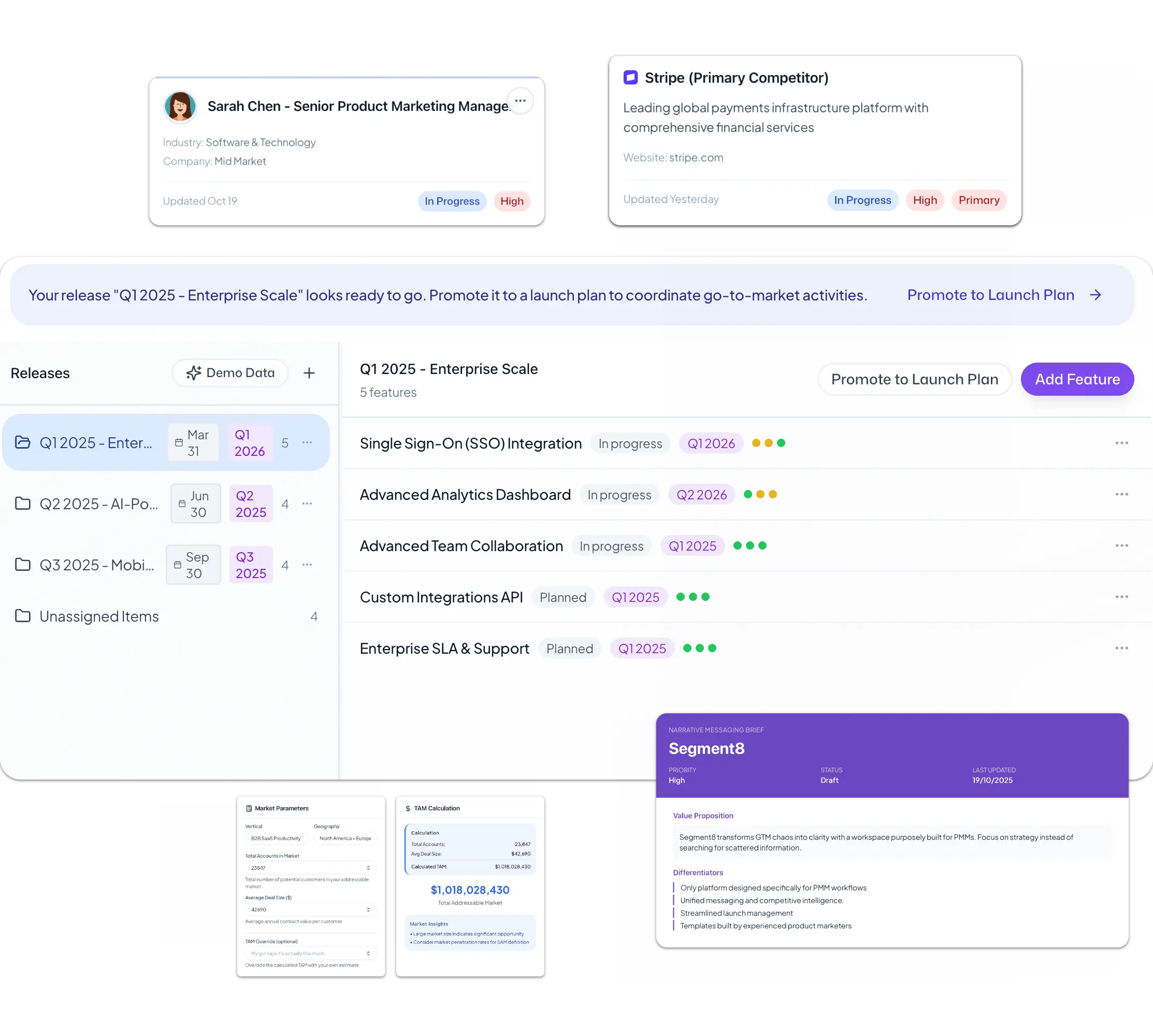

Founder, Segment8

Founder & CEO at Segment8. Former PMM leader at Procore (pre/post-IPO) and Featurespace. Spent 15+ years helping SaaS and fintech companies punch above their weight through sharp positioning and GTM strategy.
